The Role of Simulation Training and Skill Evaluation in Maintenance of Certification

Harrith M. Hasson, MD, John Morrison, MD

Disclosure: Dr. Hasson is the inventor of the SurgicalSIM LTS Simulator.

We are witnessing a paradigm shift and accelerated evolution in surgical education, physician certification and maintenance of certification, and assessment of competency. The Halsted apprenticeship model used for over 100 years is being replaced by an objective curriculum model reflecting tenets of modern learning theory. The main impetus for this change is the need to keep up with the rapid growth of medical knowledge available to physicians, incorporate that knowledge into their practices, and improve patient care. The model involves 3 basic steps: (1) presenting information with instructions regarding pertinent cognitive and technical skills to be learned; (2) allowing learners to practice the skills they are acquiring; and (3) providing remedial feedback. Social skills are also pertinent, and in team training models, these can be taught and assessed in a similar way. The cycle is continued until proficiency is achieved as measured by objective metrics.1,2 This model incorporates simulation and skills evaluation at several levels in the process, and as such, these have become integral parts of teaching and testing. This fact is particularly relevant to laparoscopic/endoscopic surgery, where the skills needed are fundamentally different from those needed for open surgery. The digital image nature of the surgery makes it possible to objectively teach and evaluate required technical skills by recording and breaking down key components of each skill, all in simulated environments.

Current computer-based simulation systems also make it possible to objectively evaluate cognitive and nontechnical skills used in clinical scenarios. This rigorous system of assessment/training is different from traditional methods that rely mainly on subjective evaluations. A paradigm shift toward defining surgical proficiency by objective assessment is also underway, with a view to using such assessment for credentialing surgeons for certain procedures and for maintenance of certification.

ADVANTAGES OF SIMULATION TRAINING

Simulation training is becoming an integral part of surgical training because it provides obvious advantages, particularly in the present climate of diminished clinical exposure.2 Surgical learning is shifting from primarily real-life experience to simulation, because simulation allows trainees to make mistakes and then learn from their errors in a safe, protected environment. This offers physicians the ability to assess/develop technical and nontechnical skills and to achieve proficiency in such skills through repetitive practice at their own pace. However, it should be noted that expert assistance and remedial feedback are absolutely necessary for learning many of the skills. Proper learning of such skills is not possible without feedback.3

The ready availability of a simulation center and the ability to practice at convenient times give learners an opportunity to develop and retain skills by engaging in regular practice to protect against skill decay. The use of trainers to “warm up” just prior to performing an actual surgical procedure has also been shown to be of value by improving performance.4 Shifting from real-life training to simulation training also allows mentors to easily calibrate the degree of difficulty in the simulated environment as needed. Computer-based simulation training is by nature interactive, and as such, engages participants in an active learning process that is conducive to improved retention of information compared with the traditional passive-lecture format.2 “Tell me and I’ll forget; show me and I may remember; involve me and I’ll understand.” Chinese Proverb. Finally, in a computer-based simulation, all scoring and assessment are done by the machine according to preset values, which eliminates observer bias.

THE AMERICAN BOARD OF SURGERY (ABS) MAINTENANCE OF CERTIFICATION (MOC) PROGRAM

The ABS created the MOC program in 2005 to restructure the traditional recertification process into one of ongoing learning, assessment, and improvement.5 The 6 basic physician competencies (patient care, medical knowledge, professionalism, interpersonal and communication skills, practice-based learning and improvement, and systems-based practice) are reflected in the 4 components of maintenance of certification: (1) professional standing, (2) lifelong learning and self-assessment (LLSA), (3) cognitive expertise, and (4) evaluation of surgical performance and outcomes.

Professional Standing

Professional standing requires a full, unrestricted medical license verified every 3 years with solicitation of reference letters from the chief of surgery or chief of staff and chair of the credentials committee at primary institutions.

Lifelong Learning and Self-Assessment

Lifelong Learning and Self-assessment (LLSA) requires continuing medical education (CME): 50 hours yearly of which 30 hours must be in category 1 – verified every 3 years. The majority of self-assessment CME must be “active” not “passive” by the second 3-year cycle.5

Cognitive Expertise

Assessment of cognitive expertise has not changed. It requires a secure examination every 10 years that may be taken 3 years prior to expiration of certification.

Evaluation of Surgical Performance

Evaluation of surgical performance and outcomes, as well as other requirements of the MOC program, has not yet been finalized; however, a recent mandate of the ABS requires successful completion of the Fundamentals of Laparoscopic Surgery (FLS) program by graduating resident surgeons before they sit for its qualifying examination.6 Where and when actual surgical skills testing will be incorporated into the certification process remains to be determined; however, we feel that its inclusion is inevitable.

So where and how do simulation and skills evaluation play a role in the Maintenance of Certification process now and in the future?

For professional standing, as stated above, the delegate will be required to have a letter from the hospital regarding operating privileges. Hospitals are increasingly requiring documentation of training to demonstrate competence, particularly with respect to new techniques and procedures. Because of the complex nature of the skills being taught, these training sessions almost always involve the use of simulation. When a new procedure or service is being added, most training courses incorporate some form of simulation, whether low fidelity or based on sophisticated equipment, so simulation programs play an important, albeit indirect, role in this part of the certification process.

Credentialing of physicians by hospitals is a complex topic, with decisions on who is approved for which privileges usually left to the individual institution and very little standardization. A recent survey of hospitals across the USA asked about their policies on requiring specialty board certification and maintenance of certification as a prerequisite for obtaining and keeping privileges. A smaller percentage of hospitals than one would expect required board certification and recertification for continued privileges. Even in hospitals that required certification, there was wide flexibility in granting physicians continued privileges even when their board certifications had expired.7 This seems to run counter to the trend toward board certification that graduates of accredited programs are encouraged to pursue. In fact, in contrast to the low level of requirements at the institutions in which they practice, the majority of physicians are voluntarily seeking board certification and recertification.8 Reasons for physician interest in maintaining certification range from local institutional requirements to a desire to keep up with current knowledge to marketing. Advertisements in the lay media encourage prospective patients to inquire about their physicians’ board status. So certification and recertification are very important issues.

As simulation matures and data continue to accumulate demonstrating the accuracy of simulation in predicting physician competence, hospitals would be expected to adopt a more uniform method of credentialing. At present, credentialing is determined by the institution, ranging from a “laundry list” of privileges, where each procedure is evaluated and privileges are either granted or denied, to “broad specialty-based” privileges, where the specialty’s customary range of privileges is granted en bloc. The problem with broad, specialty-based privileging is that not all surgical practices are identical. Surgeons may have practices weighted heavily toward certain areas of their specialty while performing fewer procedures in other areas. Determining individual competency and credentialing is a much more daunting endeavor in this instance, and customized privileging will most likely rely heavily on simulation-based training, with its ability to predict competency, to assist in granting privileges.

With respect to Lifelong Learning and Self-Assessment, ie, CME, simulation devices with objective data acquisition and documentation capabilities are playing an increasing role in teaching new procedures and concepts and in documenting that the procedure has been learned. Simulation programs thus play a direct role in this aspect of certification. A review of most major surgical organization meetings will reveal numerous courses on specific procedures that utilize simulation, giving participants the opportunity either to practice the new procedure or to gain experience with the equipment being taught.

The use of simulators for evaluating overall surgical performance has its anchor in Team Training exercises, where procedural skills, judgment, and communication skills are all tested concurrently by using simulated operating room conditions. A direct benefit of simulation training was the development of objective measurement systems that can reliably evaluate surgical skill.

Simulation, then, is intimately involved in the maintenance of certification and will continue to become more so. A recent article in the magazine Discover also hints at this future in surgical and medical training using simulators (Figure 1).

Figure 1. Discover magazine.

The questions then turn to (1) which simulation programs will be used for training and testing, (2) how will they be used, and (3) how do they evaluate performance?

ASSESSING TECHNICAL SKILLS

Certain inherent abilities are required for a surgeon to perform laparoscopic surgery. These include the ability to operate on a 3-dimensional object from a 2-dimensional video image and to actively develop the psychomotor hand-eye coordination necessary for performing surgery on the projected image.9 Innate abilities are not trainable, but training and practice help surgeons realize their full potential within the constraints of their natural abilities.10

There are 3 levels of skill assessment/training with ascending complexity; first there are basic coordination exercises that assess inherent ability; then there are enabling skills/tasks that duplicate surgical maneuvers: cannulation, clip application, cutting, camera navigation, ligation, suturing, knot tying, and application of energy sources; and finally simulation of entire laparoscopic procedures using virtual reality. Inherent abilities are needed and used to develop basic skills. Combinations of these skills are used to perform a task, and then a series of tasks are integrated to simulate a procedure.9

Basic Skills -> Enabling Skills -> Procedure

Enabling skills/tasks acquired in a simulated environment represent the building blocks for achieving technical proficiency in laparoscopic surgery.11 There is a difference between acquiring laparoscopic abilities and enabling skills/tasks. Basic skills that reflect innate abilities require brief instructions/mentoring. On the other hand, enabling skills and tasks (especially suturing and knot tying) are mentor dependent, because they require a certain amount of learning involving detailed instructions with feedback. In the absence of feedback, proper learning is not possible.3 Feedback can be achieved through a mentor-type system or via a computer program built into a simulator.

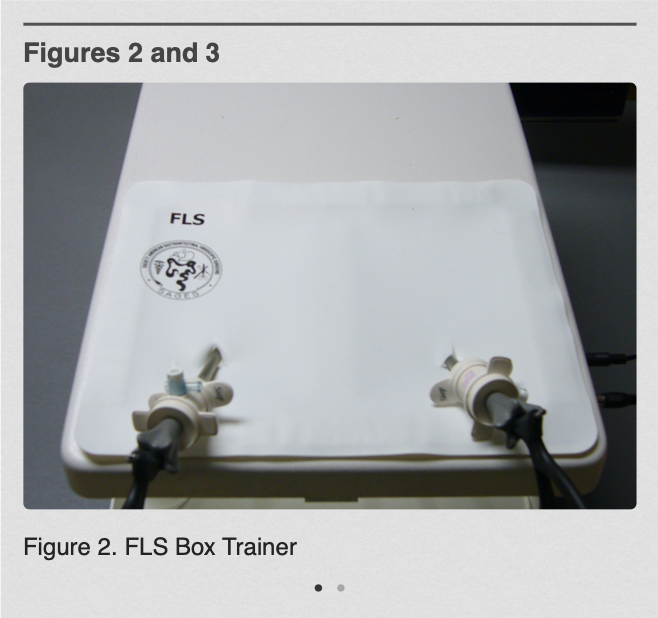

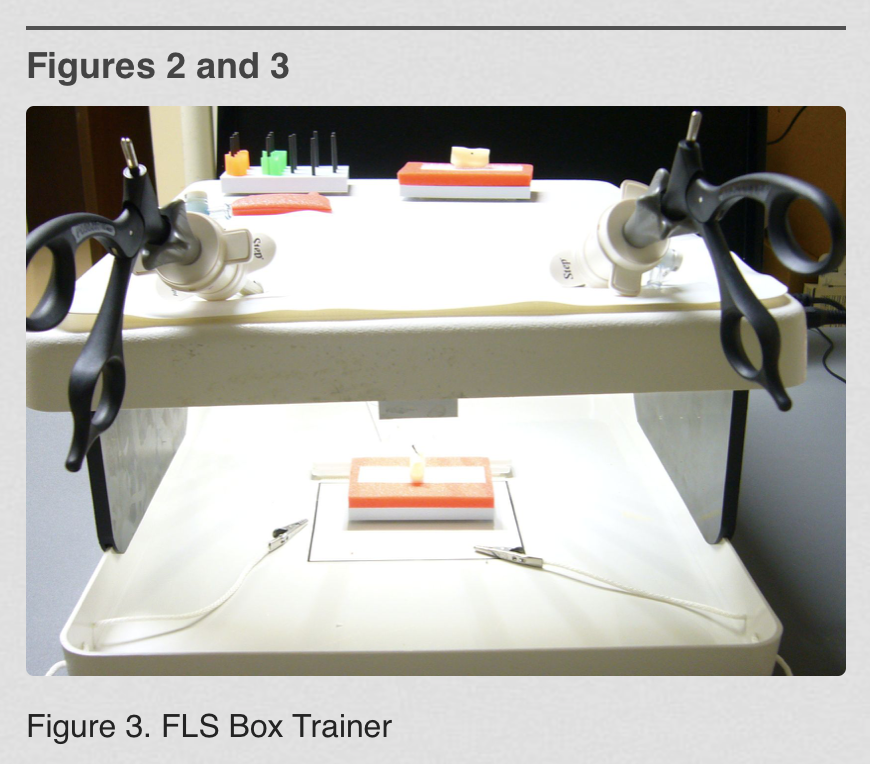

The successful transfer to the OR of skills acquired with either VR (virtual reality) simulators or box trainers has been confirmed.12-15 The manual laparoscopic skills developed in the low-fidelity FLS Box Trainer (Figures 2 and 3) have been shown to be directly transferable to the OR.16

Figure 2. (FLS Box Trainer)

Figure 3. (FLS Box Trainer)

The FLS program is a joint educational offering of the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) and the American College of Surgeons (ACS). It is available to surgical trainees and practitioners and consists of didactic and technical skill components. The didactic component is presented with liberal use of illustrations via multimedia on CD-ROM. It is also available on the Web, so it can provide multiple educational opportunities.17

The skill component utilizes a low-fidelity trainer box with a set of simulated laparoscopic exercises viewed with a built-in 2-D video camera. Routine laparoscopic instruments are used to manipulate the exercises. The manual skill exercises of the FLS box are based on the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS).18 The exercises train/assess specific basic laparoscopic skills that include bimanual manipulation/transfer of objects, precise cutting, intra- and extracorporeal suturing and knot tying, and use of a ligating loop. Performance is assessed with McGill metrics, which reward precision and speed and penalize errors. Currently, the Residency Review Committee (RRC) has mandated that surgery residents completing their training in 2010 and thereafter successfully complete the FLS course, which has been shown to be a strong predictor and indicator of surgical skill and proficiency.16 Because of the construct and predictive validity of this and other simulator programs, it is anticipated that similar evaluations will become requirements for practicing surgeons seeking recertification as well.
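As a rough illustration of how a metric can reward speed and precision while penalizing errors, the sketch below assumes a simple form in which a task score equals a cutoff time minus the completion time minus an error penalty, floored at zero. The function name, the cutoff time, and the penalty values are hypothetical and are not taken from FLS or MISTELS documentation.

```python
def task_score(cutoff_s: float, completion_s: float, penalty: float) -> float:
    """Illustrative score: faster completion and fewer errors give a higher score.

    cutoff_s     -- assumed maximum time allowed for the task, in seconds
    completion_s -- time the trainee actually took, in seconds
    penalty      -- assumed points deducted for errors or imprecision
    """
    if completion_s < 0 or penalty < 0:
        raise ValueError("completion time and penalty must be non-negative")
    # The score never drops below zero, even if the trainee runs out of time.
    return max(0.0, cutoff_s - completion_s - penalty)


# Hypothetical attempts at one exercise with an assumed 300-second cutoff.
fast_clean = task_score(cutoff_s=300, completion_s=90, penalty=0)
slow_errors = task_score(cutoff_s=300, completion_s=200, penalty=40)
timed_out = task_score(cutoff_s=300, completion_s=320, penalty=0)
```

Under these assumptions, a fast, error-free attempt scores higher than a slow attempt with errors, and an attempt that exceeds the cutoff scores zero.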

Other more sophisticated basic trainers, such as the METI SurgicalSIM LTS (www.METI.com), a computer-based physical reality simulator with embedded McGill metrics and similar skill exercises, add a higher, more comprehensive level of basic skills training19 (Figure 4).

Figure 4. LTS Trainer.

The LTS has a basic skills set with data acquisition and documentation capabilities that allow it to demonstrate the participants’ progress on this device. It has been compared with MISTELS with favorable results. Performance on the LTS showed a good correlation with the participant’s level of surgical experience (P<0.001) and with performance on MISTELS (r=0.79). Participant satisfaction was greater with the LTS than with MISTELS (LTS 79.9 vs. MISTELS 70.4; P=0.012).20

The ability of an enhanced program, such as LTS or MISTELS, to objectively collect skill performance data makes it appealing as an unbiased evaluation tool for technical skills assessment.21,22

ASSESSING NONTECHNICAL SKILLS

Nontechnical skills are defined as behavioral aspects of performance in the OR that are not related directly to medical expertise, use of equipment, or drugs.23 They involve cognitive and social skills displayed by individuals working in a team.24 Nontechnical skills are manifested in aspects of performance, such as leadership, decision-making, task management, communication and team working, and situational awareness.23

The importance of nontechnical skills with regard to patient safety was recently reinforced by a study showing that failure to communicate was a primary factor in 43% of errors made during surgery.25 Nontechnical skills were also found to influence the duration and possibly safety of the surgical procedure.24 At the present time, nontechnical skills and team training are not being assessed independently in the certification process, but are being taught at the medical student level as “team training exercises” in a simulated operating room environment (Figure 5).

Figure 5. Team Training.

However, this is expected to change as training tools and measurement methods are developed24 and assessing this aspect of physicians’ ability should then become an integral part of the overall evaluation process.

ASSESSING SURGICAL PERFORMANCE

At this time, assessing intraoperative surgical performance requires review of unedited videos of the surgical procedure. To accomplish this, one approach utilized in Japan was the development and implementation of a system for reviewing unedited videotapes of laparoscopic nephrectomies or adrenalectomies by utilizing simplified criteria to assess the laparoscopic surgical skills of urologists. Qualified applicants with 2 years of laparoscopic experience were enrolled in this evaluation program. The score of a “perfect” procedure is 75 points, with 1 to 5 points deducted for each “dangerous maneuver” or error. More than 60 points are required to pass; 2 referees reviewed unedited videotapes showing the entire laparoscopic procedure and assessed them according to established guidelines. To establish this referee system, 6 experts were first selected and then 23 were chosen from 36 referee applicants. Each referee had completed more than 100 laparoscopic surgeries and was chosen after video assessments by the 6 initial expert referees. Of 5600 certified urologists in Japan, 205 applied to this system in its first year, including the 6 expert referees and 36 referee applicants. After video assessments by the referees, 136 applicants were certified as having appropriate skills, resulting in a 66% pass rate.26
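The deduction-based scoring described above can be sketched in a few lines. This is only an illustration of the arithmetic stated in the text (75 points for a perfect procedure, 1 to 5 points deducted per error, more than 60 points to pass); the function names are hypothetical, and how the scores of the 2 referees are combined is not described in the text, so only a single referee's score is modeled here.

```python
from typing import Sequence

PERFECT_SCORE = 75   # score of a "perfect" procedure, per the text
PASS_THRESHOLD = 60  # more than 60 points are required to pass


def video_score(deductions: Sequence[int]) -> int:
    """Subtract each 1-to-5-point deduction from the perfect score."""
    for points in deductions:
        if not 1 <= points <= 5:
            raise ValueError("each deduction must be between 1 and 5 points")
    return max(0, PERFECT_SCORE - sum(deductions))


def passes(score: int) -> bool:
    """A score must be strictly greater than the threshold to pass."""
    return score > PASS_THRESHOLD


def pass_rate(certified: int, applicants: int) -> float:
    """Fraction of applicants who were certified."""
    return certified / applicants


# Hypothetical review with three recorded errors.
example_score = video_score([5, 5, 3])
# Figures reported in the text: 136 certified out of 205 applicants.
first_year_rate = pass_rate(136, 205)
```

With the reported figures, `first_year_rate` rounds to the 66% pass rate quoted above.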

In a similar attempt to objectively evaluate surgical performance, Reznick et al27 modified the Objective Structured Clinical Examination (OSCE), which had been used to assess clinical skills, and developed the Objective Structured Assessment of Technical Skills (OSATS) Exam. The surgeon’s performance is assessed by 2 examiners using a global rating scale of 7 dimensions of operative performance: respect for tissue, time/motion, instrument handling, flow of operation, knowledge of instruments, knowledge of procedure, and use of assistants. Each dimension is scored from 0 (poor) to 5 (excellent), and a numerical value is obtained.

Reviewing unedited surgical videos according to OSATS or similar criteria is currently the gold standard of proficiency assessment.28 The videos are evaluated by 2 blinded experts who must be in agreement at least 80% of the time (interrater reliability criterion P≥0.80). Although this method qualifies as being objective, it is not perfect, because it still represents subjective evaluations by 2 individuals. Furthermore, the process is labor intensive, time consuming, and it relies on the availability of experienced surgeons to rate the performance.29 In long procedures, the attention span of the reviewers may deteriorate with time and the quality of review may suffer.
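To make the rating and agreement steps concrete, the sketch below totals a global rating across the 7 dimensions and computes how often 2 examiners agree. It assumes the 0-to-5 scale given in the text and treats agreement as the simple proportion of dimensions on which both examiners give the same score; the text does not specify how agreement is calculated, so that choice, the function names, and the example ratings are all hypothetical.

```python
DIMENSIONS = (
    "respect for tissue",
    "time/motion",
    "instrument handling",
    "flow of operation",
    "knowledge of instruments",
    "knowledge of procedure",
    "use of assistants",
)
AGREEMENT_CRITERION = 0.80  # examiners must agree at least 80% of the time


def total_score(ratings: dict) -> int:
    """Sum one examiner's ratings over all 7 dimensions (0 to 5 each)."""
    for name in DIMENSIONS:
        if not 0 <= ratings[name] <= 5:
            raise ValueError(f"rating for {name!r} must be between 0 and 5")
    return sum(ratings[name] for name in DIMENSIONS)


def percent_agreement(rater_a: dict, rater_b: dict) -> float:
    """Proportion of dimensions on which both examiners gave the same score."""
    matches = sum(1 for name in DIMENSIONS if rater_a[name] == rater_b[name])
    return matches / len(DIMENSIONS)


# Hypothetical ratings of one video by two blinded examiners.
examiner_a = dict(zip(DIMENSIONS, [4, 3, 4, 4, 5, 4, 3]))
examiner_b = dict(zip(DIMENSIONS, [4, 3, 4, 3, 5, 4, 3]))

agreement = percent_agreement(examiner_a, examiner_b)
meets_criterion = agreement >= AGREEMENT_CRITERION
```

In this made-up example the examiners differ on one dimension out of 7, so the agreement criterion is met. A formal reliability analysis would typically use a chance-corrected statistic rather than raw agreement.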

To popularize review of unedited surgical videos as a mainstream method for assessing proficiency of surgical performance, some portions of the review will need to be automated and annotated to save time and resources. Additionally, the video review may be limited to predetermined, critical portions of the procedure. The technology for automated review will likely emerge from biometric technological applications, such as facial recognition software currently used in security systems and Internet celebrity search engines.30 The same technology can be extended to laparoscopic surgery in a new application of surgical scene recognition programming. In this case, recognition algorithms are developed to extract features/landmarks from a large number of laparoscopic surgical scenes showing generic and procedure-specific complications. The surgical scenes showing complications are collected and stored in an extensive database. Surgical scenes in the video to be evaluated are compared with those in the extensive database. A match is declared indicating a complication whenever specific features/landmarks extracted from the video of the laparoscopic procedure match those found in the complication database.
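The matching step described above can be sketched as a comparison between a feature vector extracted from a video scene and reference vectors stored in a complication database. This is a toy illustration only: it assumes that features have already been extracted as numeric vectors, uses cosine similarity with an arbitrary threshold as the matching rule, and uses made-up labels and values. None of these details come from an existing surgical scene recognition system.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_complication(
    scene: Sequence[float],
    database: Dict[str, Sequence[float]],
    threshold: float = 0.95,
) -> Optional[str]:
    """Return the best-matching complication label, or None if nothing reaches the threshold."""
    best_label, best_similarity = None, threshold
    for label, reference in database.items():
        similarity = cosine_similarity(scene, reference)
        if similarity >= best_similarity:
            best_label, best_similarity = label, similarity
    return best_label


# Hypothetical database of reference feature vectors for two complications.
complication_db = {
    "bleeding": [0.9, 0.1, 0.0],
    "thermal injury": [0.1, 0.8, 0.3],
}

flagged = find_complication([0.88, 0.12, 0.01], complication_db)
unflagged = find_complication([0.3, 0.3, 0.9], complication_db)
```

In this sketch the first scene closely resembles the stored "bleeding" vector and is flagged, while the second resembles neither reference closely enough and is not. Extracting reliable features from laparoscopic video is the hard part and is not shown here.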

Assessment of surgical performance through review of unedited videos is not required for recertification at the present time, but with the availability of software as described above it may become a routine part of the certification process.

ASSESSING PATIENT OUTCOME

Tracking and assessment of patient outcome data over time for complications and quality of life is difficult and time consuming. However, it is an important aspect of surgical practice. At the present time, the MOC program recommends participation in a national, regional, or local outcomes database or quality-assessment process, such as National Surgical Quality Improvement Program (NSQIP) through the American College of Surgeons, Surgical Care Improvement Project (SCIP) through JCAHO, Physician Quality Reporting Initiative (PQRI) through CMS, or the American College of Surgeons (ACS) case log system that must be validated every 3 years.4 The ultimate goal of training and skill acquisition is improved patient outcomes compared with national standards and reduction in complications. Simulation thus plays and will continue to play a significant role in building and maintaining the tools necessary for competent medical care as discussed above.

CONCLUSION

The change in Maintenance of Certification is here. Simulation and skills testing are presently playing a role in certification both directly and indirectly, but will play an increasingly important part in this process as programs are developed and tested. The availability of objective, standardized, quantitative data with good construct and predictive validity generated by these programs makes simulation appealing in the certification process.

A survey of physicians in Oregon showed that 95% of those with time-limited certification plan to undergo recertification.8 It would stand to reason that across the US, Specialty Boards can anticipate similar interest in recertification. Provisions will have to be made to handle this demand, particularly in light of the changes being implemented.

To train and test this number of physicians, established centers will be needed where simulation can be taught and testing can be performed on practicing surgeons seeking recertification. This will be a much more sophisticated environment than that used previously for certification, which consisted of a large room where multiple-choice tests were given. The American College of Surgeons foresees that such sites will play an integral part in this recertification process and encourages the development of level 1 and 2 centers to give physicians access so that they can adequately train and acquire and maintain their knowledge and skills.31

At Louisiana State University Health Sciences Center (LSUHSC) in New Orleans, Louisiana, which has a Level 1 ACS-accredited facility, we held a seminar on March 13, 2009, for practicing surgeons who had not had previous experience with simulation and skills testing (Figure 6).

Figure 6. LSUHSC Learning Center.

Included in the seminar were talks regarding the changes in Maintenance of Certification and their ramifications, basic skills training, ie, FLS skills training, team training using simulated operating room scenarios with participants engaging in role changing, and procedure-specific training, ie, retroperitoneal exposure techniques for control of bleeding using a cadaveric model.

Participants felt that the program and the information about MOC changes were very helpful and relevant to their practice (Personal communication, John Paige, MD, Director of Center for Advanced Learning Isidore Cohn Center LSU New Orleans, Louisiana, 2010).

We feel that this model, or one similar to it, will become commonplace in the future, with surgeons having access to centers where they train in a simulation environment to acquire new skills, maintain already learned skills, and be tested as part of the recertification process. This process will play a major role in the assessment of ability and thus competence of surgeons and assist in the credentialing process.

These changes should ultimately result in improved patient care and allow physicians to keep up with the rapid changes in medicine; we also hope they will help in the matter of tort reform by objectively demonstrating competence.

Embracing this evolving technology and learning environment is necessary if one is going to maintain certification and competence in surgery.

Address correspondence to: Harrith M. Hasson, MD, 6250 Winter Haven Rd, NW, Albuquerque, NM 87120-2645, USA. Telephone: (505) 792-0240, Mobile: (773) 294-5440, Fax: (505) 792-0241, E-mail: drhasson@aol.com.

References:

- Baker DP, Day R, Salas E. Teamwork as an essential component of high-reliability organizations. Health Serv Res. 2006;41:1576-1598.

- Kneebone R. Evaluating clinical simulations for learning procedural skills: a theory-based approach. Acad Med. 2005;80:549-553.

- Mahmood T, Darzi A. The learning curve for a colonoscopy simulator in the absence of feedback: no feedback, no learning. Surg Endosc. 2004;18:1224-1230.

- Do AT, Cabbad MF, Kerr A, et al. A warm-up laparoscopic exercise improves the subsequent laparoscopic performance of Ob-Gyn residents: a low-cost laparoscopic trainer. JSLS. 2006;10:297-301.

- Nussbaum MS. Invited lecture: American board of surgery maintenance of certification explained. Am J Surg. 2008;195:284-297.

- Kavic MS. Maintenance of certification. JSLS. 2009;13:1-3.

- Freed G, Dunham K, Singer D. Use of board certification and recertification in hospital privileging. Arch Surg. 2009;144(8):746-752.

- Bower E, Choi D, Becker T, et al. Awareness of and participation in maintenance of professional certification: a prospective study. J Contin Educ Health Prof. 2007;27(3):164-172.

- Satava RM, Cuschieri A, Hamdorf J. Metrics for objective assessment. Surg Endosc. 2003;17:220-226.

- McMillan AM, Cuschieri A. Assessment of innate ability and skills for endoscopic manipulation by the Advanced Endoscopic Psychomotor Tester: predictive and concurrent validity. Am J Surg. 1999;177:274-277.

- Hasson HM. Core competency in laparoendoscopic surgery. JSLS. 2006;10:16-20.

- Valentine RJ, Rege RV. Integrating technical competency into the surgical curriculum: doing more with less. Surg Clin North Am. 2004;84:1647-1667.

- Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236:458-463.

- Grantcharov TP, Kristiansen VB, Bendix J, Bardram L, Rosenberg J, Funch-Jensen P. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91:146-150.

- Gurusamy K, Aggarwal R, Palanivelu L, Davidson BR. Systematic review of randomized controlled trials on the effectiveness of virtual reality training for laparoscopic surgery. Br J Surg. 2008;95:1088-1097.

- Fried GM. FLS assessment of competency using simulated laparoscopic tasks. J Gastrointest Surg. 2008;12:210-212.

- Cook DA, Levinson AJ, Garside S, Dupras DM, et al. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300:1181-1196.

- Derossis AM, Fried GM, Abrahamowicz M, Sigman HH, Barkun JS, Meakins JL. Development and validation of a model for training and evaluation of laparoscopic skills. Am J Surg. 1998;175:482-487.

- Hasson HM. Simulation training in laparoscopy using a computerized physical reality simulator. JSLS. 2008;12:363-367.

- Sansregret A, Fried GM, Hasson H, Klassen D, Lagace M, Gagnon R. Choosing the right physical laparoscopic simulator? Comparison of LTS2000-ISM60 with MISTELS: validation, correlation and user satisfaction. Am J Surg. 2009;197:258-265.

- Heinrichs L, Lukoff B, Youngblood P, et al. Criterion-based training with surgical simulators: proficiency of experienced surgeons. JSLS. 2007;11:273-302.

- Soyinka AS, Schollmeyer T, Meinhold-Heerlein I, et al. Enhancing laparoscopic performance with the LTS3E: a computerized hybrid physical reality simulator. Fertil Steril. 2008;90(5):1988-1994.

- Yule S, Flin R, Paterson-Brown S, Maran N, Rowley D. Development of a rating system for surgeons’ non-technical skills. Med Educ. 2006;40:1098-1104.

- Catchpole KR, Giddings AEB, Hirst G, Dale T, et al. A method for measuring threats and errors in surgery. Cogn Tech Work. 2008;10:295-304.

- Gawande AA, Zinner MJ, Studdert DM, Brennan TA. Analysis of errors reported by surgeons at three teaching hospitals. Surgery. 2003;133:614-621.

- Matsuda T, Ono Y, Terachi T, et al. The endoscopic surgical skill qualification system in urological laparoscopy: a novel system in Japan. J Urol. 2006;176:2168-2172.

- Reznick R, Regehr G, MacRae H, Martin J, McCulloch W. Testing technical skill via an innovative ‘bench station’ examination. Am J Surg. 1997;173:226-230.

- Aggarwal R, Grantcharov T, Moorthy K, Milland T, Darzi A. Toward feasible, validated and reliable video-based assessments of technical surgical skills in the operating room. Ann Surg. 2008;247:372-379.

- Dath D, Regehr G, Birch D, et al. Toward reliable operative assessment: the reliability and feasibility of videotaped assessment of laparoscopic technical skills. Surg Endosc. 2004;18:1800-1804.

- Jain A, Ross A, Prabhakar S. An introduction to biometric recognition. IEEE Transactions on Circuits and Systems for Video Technology, Special Issue on Image- and Video-Based Biometrics. 2004;14(1):4-20.

- Sachdeva A, Pellegrini C, Johnson K. Support for simulation-based surgical education through American College of Surgeons-Accredited Education Institutes. World J Surg. 2008;32:196-207.